All electronic force measuring systems, including industrial weighing systems, consist of a series of components. These components carry the end-to-end signal flow, from the force sensor(s) to the output display or control system. Each of these components can introduce error in the final measurement, and that error tends to grow over time as the components wear.

Calibration is the process of comparing the system’s actual output signal or weight indication to what it “should” be, and adjusting the system so that it outputs the correct value within an acceptable tolerance. The “should” value is determined by weighing a known standard traceable to an authoritative primary standard. This article explains the process of calibrating the force measuring system to ensure its accuracy throughout its service life.

The sections below explain the following topics:

- The Measuring System

- Errors in the Measuring System

- Factors for Proper Calibration

- Standards and Traceability

- Calibration Methods

- Steps for Calibrating the Force Measuring System

- Frequency of Calibration

The Measuring System

NOTE: In this article, we refer to the measured quantity as the measurand. (This term is consistent with International Standards.) The measurand of interest here includes load, weight, force and pressure.

As mentioned before, a deployed measuring system in the field is a sum of many parts. Although maintenance calibrations usually cover the whole end-to-end apparatus, the individual components still matter. If the system as a whole does not calibrate within expected tolerances, each component must then be calibrated separately to find which one is introducing error.

A measuring system’s individual elements usually include the following:

- Input transducer(s): Weigh systems collect input through one load cell, or through multiple load cells whose output signals connect to a single summing box. These devices convert the input force to an electrical signal.

- Signal conditioners: As the name suggests, these condition the signal by filtering out noise and amplifying the load cell output signal, which is of the order of millivolts. Filters, isolators, and amplifiers are all examples of signal conditioners.

…and one or more of these output devices:

- Monitoring system: A device that converts the signal conditioner’s output to a human-readable form, such as an LCD device.

- Data storage device: This converts the measurement signal to a data storage format for future use.

- Control system: This uses the signal conditioner output as input data for a process (such as dispensing or flow control). That is, the output becomes part of the feedback loop of the industrial process.

Our article, Measurement Uncertainty in Force Measurement, explains the potential errors or theoretical uncertainty inherent in each of these components. These are important to know when calibration gives outlier results. However, this article will continue to look at calibrating the measuring system as a whole, since this is fundamental to maintaining a deployed measuring system. With its output regularly adjusted to the expected value within an acceptable tolerance, the system should provide years of accurate, reliable service.

Note the calibration tolerance may not only be based on the manufacturer’s specified value. The tolerance may also depend on other factors including process requirements and the available test equipment’s capabilities. We’ll give more details later.

Errors in the Measuring System

Error is the difference between the output from the measuring system and the actual value of the measurand. Although measuring system errors are unavoidable, adjustments made to the system during calibration reduce this difference.

There are two categories of errors in measuring systems: systematic errors and random errors.

Systematic Errors

Systematic errors are inherent in the normal use of measuring devices. They also occur when system technicians handle or operate the instruments improperly. Frequent calibration and proper training mitigate these errors.

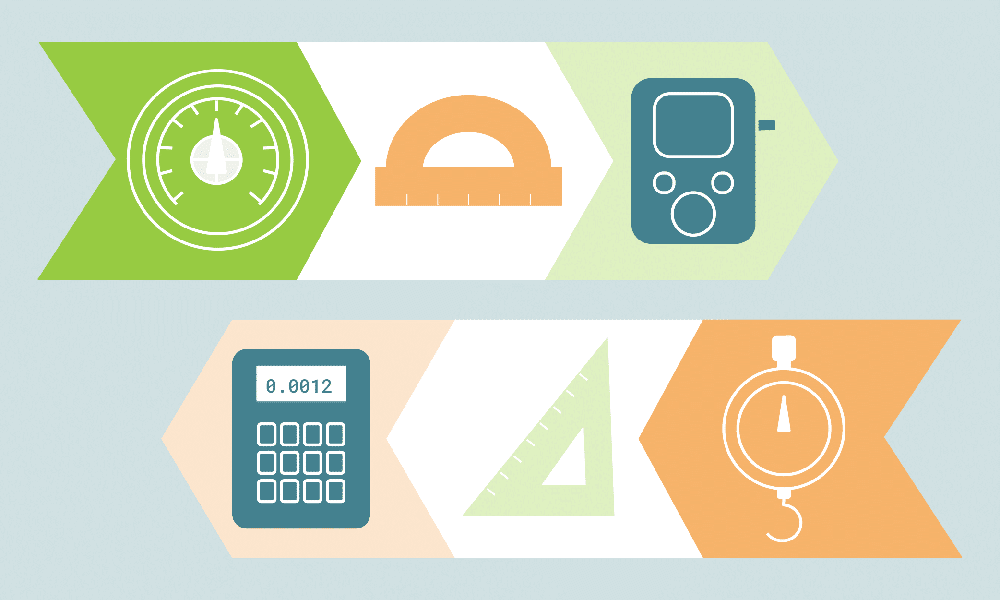

The two main types of systematic errors are zero errors and span errors. Zero errors occur when the measuring system reflects a non-zero output at no load. An instrument or device with a zero error will produce an input-output curve parallel to the true input-output curve. (See Figure 1.)

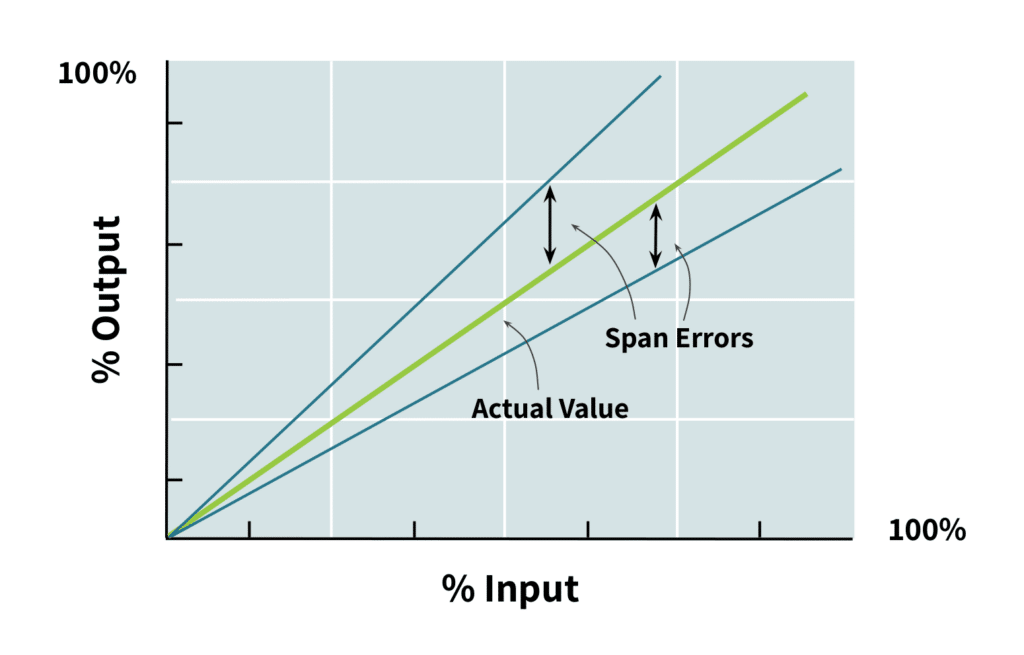

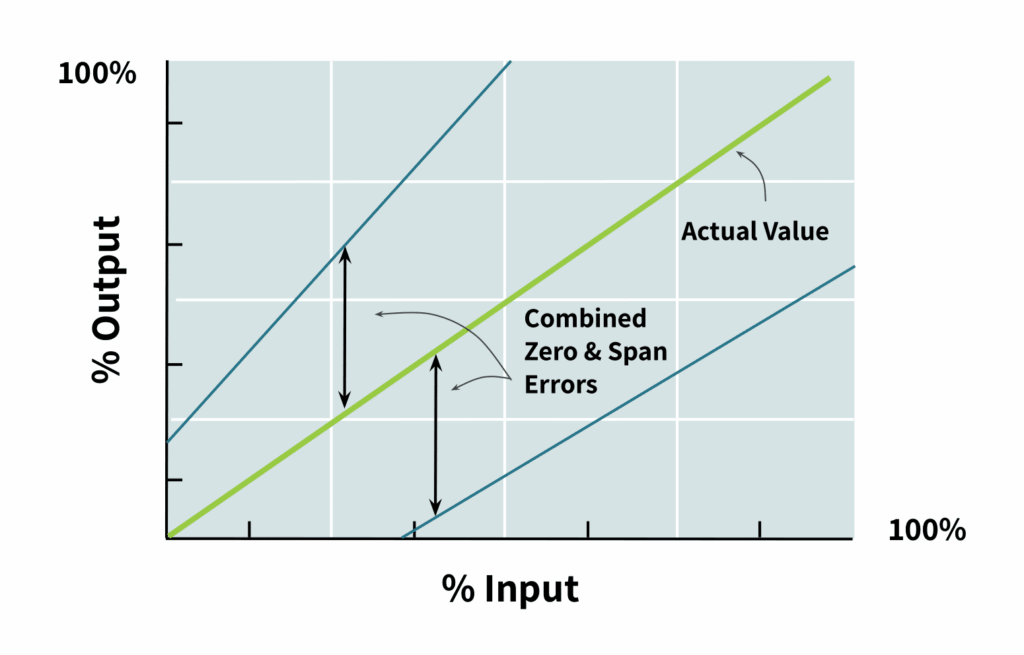

Span errors cause the slope of the measuring system’s input-output curve to differ from the slope of the true measurement input-output curve. (See Figure 2.)

The combination of these two error types forms a compound systematic error (see Figure 3). This error widens over time with system use.
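As a rough illustration of Figures 1 through 3, the sketch below assumes the system's response is a straight line: a zero error adds a constant offset and a span error scales the slope. The linear model, the function name `indicated_value`, and the numbers are assumptions made for illustration only.

```python
def indicated_value(true_value, zero_error=0.0, span_factor=1.0):
    """Assumed linear model: indicated = span_factor * true_value + zero_error."""
    return span_factor * true_value + zero_error


loads = [0.0, 50.0, 100.0]  # true applied loads, arbitrary units

# Zero error only: the curve shifts by the same amount at every load.
zero_only = [indicated_value(x, zero_error=2.0) for x in loads]

# Span error only: the error grows in proportion to the load.
span_only = [indicated_value(x, span_factor=1.02) for x in loads]

# Compound error: both effects at once.
compound = [indicated_value(x, zero_error=2.0, span_factor=1.02) for x in loads]

for name, values in [("zero", zero_only), ("span", span_only), ("compound", compound)]:
    errors = [round(v - x, 3) for v, x in zip(values, loads)]
    print(name, errors)
```

In this model the zero error is the same at every load, while the span error is zero at no load and largest at full scale.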

Random Errors

Random errors are statistical variations (both positive and negative) in the measurement result. They stem from the precision limits of a device, especially under varying environmental conditions such as electrical noise and temperature changes.

Even though random errors appear unpredictable, they often have a normal distribution about their mean value. Therefore, they are quantifiable using statistical calculations on data gathered from repeated measurements. As the document Measurement Uncertainty in Force Measurement explains, these errors are expressed as an uncertainty of a number of standard deviations. That is, based on test data, an error can be expressed as its mean value plus or minus three standard deviations, which for a normal distribution corresponds to a confidence level of about 99.7%. Random errors include non-linearity errors such as hysteresis and creep.

In practical applications, the total measuring system error is expressed as the known systematic error plus the uncertainty due to random errors.
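The calculation described above can be sketched as follows. This is a minimal example, assuming normally distributed readings of a single known reference load; the function name `summarize_readings`, the coverage factor of 3, and the sample data are hypothetical.

```python
import statistics


def summarize_readings(readings, reference_value, coverage_factor=3.0):
    """Split repeated readings into a systematic error and a random uncertainty.

    The uncertainty describes the spread of single readings, not of their mean.
    """
    mean = statistics.mean(readings)
    std_dev = statistics.stdev(readings)  # sample standard deviation
    systematic_error = mean - reference_value
    uncertainty = coverage_factor * std_dev
    return systematic_error, uncertainty


# Hypothetical repeated readings of a 100.0-unit reference load.
readings = [100.2, 100.4, 100.3, 100.1, 100.5]
systematic, uncertainty = summarize_readings(readings, reference_value=100.0)
print(f"total error = {systematic:+.3f} ± {uncertainty:.3f}")
```

The systematic part can be removed by adjustment during calibration; the random part remains as the stated uncertainty.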

Sources of Error

Calibrating the measuring system increases its accuracy. However, so does proper technique in building the system, protecting it from harsh environments, and loading the measurand. Therefore, technicians should understand the ways that they may introduce errors in the measuring system. These sources of error include:

- Environmental factors. Examples include the aforementioned temperature and electrical noise. They also include other environmental conditions such as air pressure (altitude), electromagnetic interference, and vibration from other systems.

- Inconsistent measurement methods. Each measurement must follow the same set of procedures on the measurand to avoid introducing other variables into the measurement. Examples include placement of the measurand in the device or even the rate of application of the load.

- Instrument drift: Instruments, over time and with use, eventually drift from their original settings, motivating the need for frequent calibration.

- Lag: All load cells oscillate like a spring and have a delay in reaching their steady state. Taking a measurement reading before the load cell reaches steady state will cause an inaccurate reading.

- Hysteresis. Similar to lag, a device in the measuring system may not return to equilibrium due to “memory” built up through use. The result is a different output for increasing and decreasing force, at any given force. In other words, the output of a load cell at a given force depends on whether the load increased from zero to that force or decreased from rated capacity to it.
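To make the hysteresis item above concrete, here is a small sketch that compares readings taken at the same loads on the way up and on the way down. Expressing the result as a percentage of full-scale output is a common convention but an assumption here, as are the function name and the sample readings.

```python
def hysteresis_percent(ascending, descending, full_scale_output):
    """Largest up/down difference at matching loads, as % of full-scale output."""
    differences = [abs(down - up) for up, down in zip(ascending, descending)]
    return 100.0 * max(differences) / full_scale_output


# Hypothetical outputs (mV/V) at 0, 25, 50, 75 and 100 % of capacity.
ascending = [0.000, 0.500, 1.000, 1.500, 2.000]   # load increasing from zero
descending = [0.001, 0.502, 1.003, 1.502, 2.000]  # same loads, decreasing from capacity

result = hysteresis_percent(ascending, descending, full_scale_output=2.000)
print(f"hysteresis = {result:.3f} % of full scale")
```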

Factors for Proper Calibration

According to the International Society of Automation (ISA), the definition of calibration is “a test during which known values of a measurand are applied to the transducer and corresponding output readings are documented under specified conditions.” These “specified conditions” for proper measuring system calibration include the following:

- the ambient conditions

- the force standard machine

- the technical personnel

- the operating procedures

As stated previously, variations in these conditions introduce random errors, increasing the uncertainty of the overall measuring system.

Ambient Conditions

The ambient conditions refer to the temperature and the relative humidity in the testing environment. They also refer to the altitude of the testing facility; since air pressure varies with altitude, this can affect the output of the system. Finally, they include any vibrations from surrounding processes, wind, electromagnetic interference, or any other environmental factors that can alter a precise reading.

When a load cell data sheet lists the predicted errors, these are given based on tests done at specific ambient conditions. If the load cell will be deployed at a different altitude or humidity or the like, the measuring system will require recalibration and/or compensating electronics to give an accurate result.

Force Standard Machine

The force standard machine refers to the reference standard instrument used to verify testing machines. Its measurement uncertainty should be less than one-third of the nominal uncertainty of the calibrated instrument. This reference equipment provides the reference measurements at intervals across the range between the least and greatest applied calibration forces. The force standard machine’s accuracy is checked periodically using a higher standard machine, which is in turn checked by a more accurate machine, and so forth; the most accurate force standard machine in this chain must be traceable to the SI units by accredited laboratories such as national calibration laboratories and national metrology institutes (NMIs). See “Standards and Traceability” below.

Technical Personnel

The technical personnel must be well-trained, qualified system technicians. They must perform all measurement and calibration procedures according to the equipment manufacturer’s manual, or the laboratory’s documented procedure. These personnel must have knowledge of pneumatic, hydraulic, mechanical and electrical instrumentation. Moreover, they must have received training in subjects of dynamic control theory; analog and digital electronics; microprocessors; and field instrumentation.

Operating Procedures for Calibration

Beyond technical knowledge, technicians must have keen attention to detail to ensure strict adherence to operating procedures. They must clearly document all steps and results to ensure the integrity of the calibration results. Each calibration should produce clear documentation of the date, steps followed and the environmental conditions. It should also include the weight range and intervals used, the mode (tension or compression), and the identifiers of the system or load cell, such as the serial number. This is imperative to meet quality process standards such as ISO 9000.
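One way to keep such documentation consistent is to capture it in a fixed record structure. The sketch below is only an illustration: the class name `CalibrationRecord` and every field name are hypothetical and not taken from ISO 9000 or any other standard.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class CalibrationRecord:
    """One calibration event; all field names are illustrative."""
    serial_number: str
    calibration_date: date
    mode: str                      # "tension" or "compression"
    temperature_c: float
    relative_humidity_pct: float
    range_min: float               # lowest applied load
    range_max: float               # highest applied load
    load_points_pct: list          # e.g. [0, 25, 50, 75, 100]
    readings: list = field(default_factory=list)
    procedure_notes: str = ""

    def __post_init__(self):
        # Reject records that cannot describe a valid calibration run.
        if self.mode not in ("tension", "compression"):
            raise ValueError("mode must be 'tension' or 'compression'")
        if self.range_min >= self.range_max:
            raise ValueError("range_min must be below range_max")


record = CalibrationRecord(
    serial_number="SN-0001",  # placeholder identifier
    calibration_date=date(2024, 1, 15),
    mode="compression",
    temperature_c=21.5,
    relative_humidity_pct=45.0,
    range_min=0.0,
    range_max=500.0,
    load_points_pct=[0, 25, 50, 75, 100],
)
print(record.serial_number, record.mode)
```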

Not every organization has the equipment to perform proper calibration on the measuring system. In those cases, it’s best to choose a manufacturer with the expertise to offer load cell calibration services.

Standards and Traceability

When we talk about standards relating to calibration, we refer to two different but related concepts. One definition of standards refers to the actual requirements for the performance of different types of load cells or measuring systems. These are established by several bodies including the National Institute of Standards and Technology (NIST) in the US, and the International Organization of Legal Metrology (OIML) globally. An explanation of standards from these bodies is in the following two articles: Load Cell Classes: NIST Requirements, and Load Cell Classes: OIML Requirements.

The other definition of standards refers to known quantities (weights, forces, or instruments that apply these forces) used as benchmarks to determine the accuracy of the instrument subject to calibration tests. These standards have three classifications: 1) the primary force standard; 2) the secondary force standard; and 3) the working force standard.

According to the American Society for Testing and Materials (ASTM) standard ASTM E74, Standard Practices for Calibration and Verification for Force-Measuring Instruments, the following are the definitions of each of these standards.

Primary Force Standard

A primary force standard is a dead weight force that is applied directly to the measuring system without any intermediate mechanism such as levers, hydraulic multipliers or the like. Its weight must have been verified by comparing it with reference standards, traceable to a national or international standard of mass. (Interestingly, a testament to the importance of these standards is the fact that the US Constitution, Article I, Section 8 gave Congress the power to “fix the Standard of Weights and Measures.”)

Secondary Force Standard

A secondary force standard is an instrument or mechanism whose calibration has been established by comparison with the primary force standard. In order to use a secondary force standard for calibration, it must have undergone calibration using a primary force standard.

Working Force Standard

Working force standards are the instruments that verify the accuracy of testing machines. Their calibration may be by comparison with primary force standards or secondary force standards. They are also called the force standard machines.

Traceability

Traceability refers to the chain of comparison of a standard to another more accurate standard, which has been compared to another more accurate standard, and so forth. The most accurate standard in this chain must be established by the authoritative standards body that maintains that standard of weight or measurement. At each level, the test standard should be about 4 times more accurate than the system using it for calibration.
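The accuracy ratio between adjacent levels of a traceability chain can be checked with a short script. This is a sketch under stated assumptions: the function name `check_traceability_chain` and the uncertainty figures are hypothetical, and the default ratio of 4 follows the guideline above (pass `min_ratio=3.0` to apply the one-third guideline for force standard machines instead).

```python
def check_traceability_chain(uncertainties, min_ratio=4.0):
    """Check each level against the next, less accurate one.

    `uncertainties` is ordered from the most accurate standard down to the
    system under calibration, all in the same units.
    Returns a list of (ratio, meets_guideline) pairs, one per adjacent pair.
    """
    results = []
    for better, worse in zip(uncertainties, uncertainties[1:]):
        ratio = worse / better
        results.append((ratio, ratio >= min_ratio))
    return results


# Hypothetical uncertainties in % of reading:
# primary standard, secondary standard, working standard, system under test.
chain = [0.002, 0.01, 0.05, 0.1]
results = check_traceability_chain(chain)
for ratio, ok in results:
    print(f"ratio {ratio:.1f}:1 -> {'ok' if ok else 'too low'}")
```

In this made-up chain, the working standard is only about twice as accurate as the system under test, so the last link would not meet the guideline.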

Calibration Methods

There are four primary methods of calibrating a force measuring system: 1) zero and span calibration; 2) individual and loop calibration; 3) formal calibration; and 4) field calibration.

Zero and Span Calibration

Zero and span calibration corrects the zero and span errors of the instrument. Most measuring systems have adjustment knobs or similar controls for these purposes. Zero adjustments reduce the parallel shift of the input-output curve shown in Figure 1. Meanwhile, span adjustments change the slope of the input-output curve shown in Figure 2. Tacuna Systems’ instruments allow for both zero and span adjustments (see this manual).
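In software terms, a zero and span adjustment amounts to a two-point linear correction. The sketch below assumes the system's response is linear between no load and full scale; the function name `two_point_calibration` and the readings are hypothetical and do not describe any particular instrument's adjustment procedure.

```python
def two_point_calibration(reading_at_zero, reading_at_full, full_scale_load):
    """Build a correction function from readings taken at no load and at full scale."""
    raw_span = reading_at_full - reading_at_zero
    if raw_span == 0:
        raise ValueError("zero and full-scale readings must differ")
    gain = full_scale_load / raw_span  # span (slope) correction

    def correct(raw_reading):
        # Subtract the zero offset first, then rescale the slope.
        return (raw_reading - reading_at_zero) * gain

    return correct


# Hypothetical uncorrected readings: 0.8 at no load, 102.8 at a 100.0-unit load.
correct = two_point_calibration(reading_at_zero=0.8, reading_at_full=102.8,
                                full_scale_load=100.0)
print(round(correct(51.8), 3))  # a mid-range raw reading after correction
```

Subtracting the no-load reading removes the parallel shift, and the gain term restores the slope.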

Individual Instrument and Loop Calibration

Individual instrument calibration is as the name suggests. It calibrates each component in the measuring system in isolation. Each component is first decoupled from the measuring system. Then, a known, standard source provides a range of inputs at known intervals to the component; the output is measured for each input with a calibrated readout or data collection device. Again, the technician must record each input and output meticulously.

Loop calibration, on the other hand, tests the measuring system as a whole. A known, standard force is applied to the loading platform of the entire system. The technician then records the output displayed on the system’s readout. This has the advantage of verifying the whole system all at once which saves time. If the system is able to meet the required tolerances through adjustments during the process, this type of calibration is sufficient.

Formal Calibration

Formal calibration refers to calibration procedures in an approved laboratory. This type of calibration is necessary for instruments whose use requires certification by a standards body, traceable to primary standards, for official measurements. This type of calibration requires highly controlled temperature, atmospheric pressure and humidity.

Formal calibration of the system entails calibration of each component: the transducers, displays, measuring, analyzing and computing instruments.

Field Calibration

Field calibration is a field maintenance operation at the installation site of the measuring system. This ensures there is no deviation from the normal operation, and outputs are still within maintenance tolerances. (See Load Cell Classes: NIST Requirements for a more detailed description of maintenance tolerances.) Field calibration determines the repeatability of the measurements and not the absolute accuracy of the measurement.

Note that any alterations to load cell cables could affect field calibrations and conformance to tolerances on the load cell data sheet. See our FAQ for further details.

Steps for Calibrating the Force Measuring System

Different approaches exist for calibrating a force measuring system; however, most follow the steps below, performed in as stable and controlled an environment as possible.

1. Set the input signal to 0%, that is, zero or no load. Then adjust the initial scale of the measuring system to reflect no load.

2. Next, set the input signal to 100%, or the full-scale output capacity. Then adjust the full scale of the measuring system.

3. Reset the input signal to 0% and check the system’s output reading. If the reading is off by more than one-quarter of the rated value on the instrument’s datasheet, readjust the scale until it falls within the tolerance level. This is also called the zero adjustment.

4. Reset the input signal to 100% and check the instrument’s output reading. If the reading is off by more than one-quarter of the rated value on the instrument’s datasheet, readjust the scale until it falls within the tolerance level. This is the span adjustment.

5. Repeat steps 3 and 4 until both the zero balance and the full scale are within this one-quarter tolerance. Repetition is needed because each adjustment can shift the other.

6. The calibration procedure should define the calibration range (the maximum and minimum weights in the calibration), and the measurement intervals in the measuring cycle. If the cycle has 5 intervals, apply loads sequentially as 0%, 20%, 40%, 60%, 80%, 100%, 80%, 60%, 40%, 20%, 0% of the calibration range. If it has 4 intervals, apply loads of 0%, 25%, 50%, 75%, 100%, 75%, 50%, 25%, 0%. To avoid lag errors, record the observed readings after a sufficient period of stabilization. Note that the calibration range may differ from the instrument range; the latter refers to the capability of the instrument, which is often greater than the desired measurement range or calibration range.

7. Repeat step 6 to determine the repeatability of the measurements.
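The loading sequence and repeatability check in the final steps above can be sketched in a few lines. The function names are hypothetical, and defining repeatability as the largest spread between repeated cycles at any one load point is an assumption made for this example.

```python
def load_sequence(intervals):
    """Ascending then descending load points, as % of the calibration range."""
    if intervals < 1:
        raise ValueError("intervals must be at least 1")
    step = 100.0 / intervals
    ascending = [round(i * step, 6) for i in range(intervals + 1)]
    # Mirror the ascending points without repeating the 100 % point.
    return ascending + ascending[-2::-1]


def repeatability(cycles):
    """Largest spread between repeated cycles at any single load point."""
    return max(max(point) - min(point) for point in zip(*cycles))


print(load_sequence(5))
# [0.0, 20.0, 40.0, 60.0, 80.0, 100.0, 80.0, 60.0, 40.0, 20.0, 0.0]

# Two hypothetical measuring cycles over the same three load points.
cycles = [[0.0, 20.1, 40.0],
          [0.1, 20.0, 40.3]]
print(round(repeatability(cycles), 3))
```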

Frequency of Calibration

When should the calibration of a force measuring system take place? Of course, a measuring system should be calibrated prior to installation. Tacuna Systems offers load cell calibration services for its equipment prior to shipment. Customers receive a calibration certificate with all products purchased with this service.

After installation, system calibration should take place at a minimum once every two years. You should calibrate your measuring system more often if it is used frequently, if it must maintain “legal for trade” certification, or if it experiences harsh conditions. Most systems are calibrated annually, or when they exhibit potential problems. Comparing the calibration data from one year to the next can also indicate the need for more frequent calibration.

Conclusion

Calibration of a measuring system prior to any measurement is essential for reliable data collection. It is also part of an essential maintenance plan that extends a system’s useful lifespan.

The calibration process results in a calibration curve showing the relationship between the input signal and the response of the system. Information derived from the calibration curve includes system sensitivity, linearity and hysteresis. The final goal of calibrating the force measuring system is to ensure all its components work in tandem to produce a reliable measurement within acceptable tolerances. If a system will not calibrate to within these tolerances, Tacuna Systems recommends starting with the troubleshooting procedures outlined in How to Test for Faults in Load Cells.
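As an illustration of how sensitivity and linearity can be read from a calibration curve, the sketch below fits a least-squares straight line to calibration data. The function name, the sample data, and the definition of non-linearity as the worst deviation from the fitted line (as a percentage of output span) are assumptions for this example; other definitions are also in use.

```python
def fit_calibration_curve(loads, outputs):
    """Least-squares straight line: output = sensitivity * load + offset."""
    n = len(loads)
    mean_x = sum(loads) / n
    mean_y = sum(outputs) / n
    sxx = sum((x - mean_x) ** 2 for x in loads)
    if sxx == 0:
        raise ValueError("need at least two distinct load points")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(loads, outputs))
    sensitivity = sxy / sxx
    offset = mean_y - sensitivity * mean_x

    # Non-linearity: worst deviation from the fitted line, as % of output span.
    residuals = [y - (sensitivity * x + offset) for x, y in zip(loads, outputs)]
    span = max(outputs) - min(outputs)
    nonlinearity_pct = 100.0 * max(abs(r) for r in residuals) / span
    return sensitivity, offset, nonlinearity_pct


# Hypothetical ascending calibration data: load in % of range, output in mV/V.
loads = [0, 25, 50, 75, 100]
outputs = [0.000, 0.501, 1.002, 1.501, 2.000]
sensitivity, offset, nonlinearity = fit_calibration_curve(loads, outputs)
print(f"sensitivity = {sensitivity:.5f} mV/V per %, non-linearity = {nonlinearity:.3f} %")
```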

For best results, Tacuna Systems recommends professional calibration services with the purchase of every load cell.

References

- The Calibration Principles.

- The Calibration Handbook of Measuring Instruments by Alessandro Brunelli.

- The Instrumentation Reference Book by Walt Boyes.

- A Guide to the Measurement of Force by Andy Hunt.

- The Standard Practices for Calibration and Verification for Force-Measuring Instruments.

- Force Measurement Glossary

- MechTeacher – Generalized Measurement System